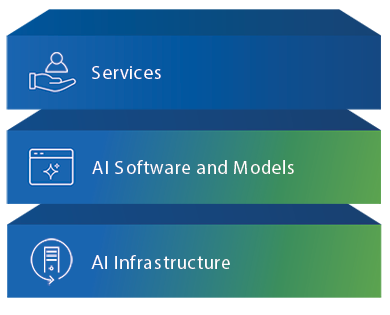

The Dell AI Factory with NVIDIA is an end-to-end enterprise AI solution. It combines Dell's AI-optimized infrastructure with NVIDIA's accelerated computing and enterprise AI software to simplify and scale AI across an organization. The platform integrates Dell compute, storage, networking, PCs/workstations, and services with NVIDIA AI Enterprise, NVIDIA NIM microservices, and the NVIDIA Spectrum-X Ethernet networking platform to deliver a full-stack, production-ready environment. It is designed to help teams identify, develop, deploy, and operate AI use cases quickly, with consistent security, governance, and manageability across desktop, data center, edge, and cloud. Dell and NVIDIA co-engineered the platform, which Dell positions as the industry's first end-to-end enterprise AI solution, to make AI deployments easier and faster.